The error

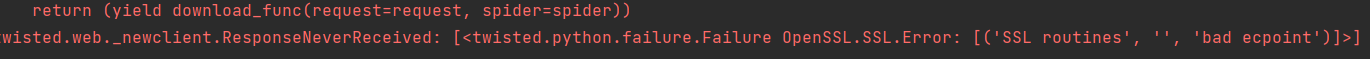

Scrapy request error log

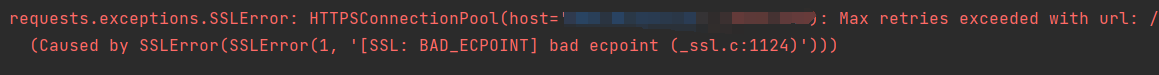

requests error log

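Since both clients failed, I suspected a fingerprinting problem: the site was probably rejecting the client's TLS fingerprint rather than the request itself. So I tried curl_cffi, which can impersonate a real browser's fingerprint. The result:

curl_cffi request succeeded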

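Solution

Replace Scrapy's download handler

Before writing one myself, I searched GitHub for an existing implementation to avoid reinventing the wheel, and, as expected, found this project: https://github.com/tieyongjie/scrapy-fingerprint/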

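Method 1 (install with pip)

Installation

```shell
pip install scrapy_fingerprint
```

Usage

After creating a Scrapy project, configure the proxy by adding the following settings to settings.py: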

```python
# proxy connection settings
PROXY_HOST = 'http-dynamic-S02.xiaoxiangdaili.com'
PROXY_PORT = 10030
PROXY_USER = '******'
PROXY_PASS = '******'
```

You also need to enable the download handler in settings.py through DOWNLOAD_HANDLERS:

```python
DOWNLOAD_HANDLERS = {
    'http': ('scrapy_fingerprint.fingerprint_download_handler.'
             'FingerprintDownloadHandler'),
    'https': ('scrapy_fingerprint.fingerprint_download_handler.'
              'FingerprintDownloadHandler'),
}
```

Requests sent with an ordinary scrapy.Request now carry a browser fingerprint:

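```python
import scrapy

# inside a spider callback such as start_requests() or parse()
yield scrapy.Request(url=url, callback=self.parse)
```

You can also choose which browser to impersonate through the request's meta: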

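```python
import scrapy

# "chrome107" is one of the browser targets supported by curl_cffi
yield scrapy.Request(url, callback=self.parse, meta={"impersonate": "chrome107"})
```

If impersonate is not set, a random browser fingerprint is used.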
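The random fallback can be pictured with the stdlib-only sketch below. It mirrors the selection logic of the handler shown in Method 2 (use `meta["impersonate"]` when present, otherwise pick a target at random); `pick_impersonate` and `DEFAULT_TARGETS` are hypothetical names, and the package's own list of targets may differ.

```python
import random
from typing import Mapping, Optional, Sequence

# Targets copied from the Method 2 handler; the pip package may use another list
DEFAULT_TARGETS = ("chrome99", "chrome101", "chrome107", "chrome110", "edge99", "edge101")


def pick_impersonate(meta: Mapping[str, object],
                     targets: Sequence[str] = DEFAULT_TARGETS,
                     rng: Optional[random.Random] = None) -> str:
    """Return meta["impersonate"] if it is set, otherwise a randomly chosen target."""
    explicit = meta.get("impersonate")
    if explicit:
        return str(explicit)
    # Fall back to the module-level RNG unless a dedicated Random instance is given
    return (rng or random).choice(targets)


print(pick_impersonate({"impersonate": "chrome107"}))  # chrome107
print(pick_impersonate({}))  # one of DEFAULT_TARGETS, different between runs
```

Passing a seeded `random.Random` as `rng` makes the choice reproducible, which is handy in tests; the real handler simply uses the module-level `random.choice`.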

Method 2 (copy the handler into your project)

Create a new file named fingerprint_download_handler.py and paste in the following code:

#!/usr/bin/env python3

# -*- coding: UTF-8 -*-

'''

@Project :crawl

@File :fingerprint_download_handler.py.py

@IDE :PyCharm

@INFO :

@Author :BGSPIDER

@Date :15/11/2024 上午9:42

'''

import asyncio

import random

from time import time

from urllib.parse import urldefrag

import scrapy

from scrapy.http import HtmlResponse

from scrapy.spiders import Spider

from scrapy.http import Response

from twisted.internet.defer import Deferred

from twisted.internet.error import TimeoutError

from curl_cffi.requests import AsyncSession

from curl_cffi import const

from curl_cffi import curl

def as_deferred(f):

return Deferred.fromFuture(asyncio.ensure_future(f))

class FingerprintDownloadHandler:

def __init__(self, user, password, server, proxy_port):

self.user = user

self.password = password

self.server = server

self.proxy_port = proxy_port

if self.user:

proxy_meta = "http://%(user)s:%(pass)s@%(host)s:%(port)s" % {

"host": self.server,

"port": self.proxy_port,

"user": self.user,

"pass": self.password,

}

self.proxies = {

"http": proxy_meta,

"https": proxy_meta,

}

else:

self.proxies = None

@classmethod

def from_crawler(cls, crawler):

user = crawler.settings.get("PROXY_USER")

password = crawler.settings.get("PROXY_PASS")

server = crawler.settings.get("PROXY_HOST")

proxy_port = crawler.settings.get("PROXY_PORT")

s = cls(user=user,

password=password,

server=server,

proxy_port=proxy_port)

return s

async def _download_request(self, request):

async with AsyncSession() as s:

impersonate = request.meta.get("impersonate") or random.choice([

"chrome99", "chrome101", "chrome110", "edge99", "edge101",

"chrome107"

])

timeout = request.meta.get("download_timeout") or 30

try:

response = await s.request(

request.method,

request.url,

data=request.body,

headers=request.headers.to_unicode_dict(),

proxies=self.proxies,

timeout=timeout,

impersonate=impersonate)

except curl.CurlError as e:

if e.code == const.CurlECode.OPERATION_TIMEDOUT:

url = urldefrag(request.url)[0]

raise TimeoutError(

f"Getting {url} took longer than {timeout} seconds."

) from e

raise e

response = HtmlResponse(

request.url,

encoding=response.encoding,

status=response.status_code,

# headers=response.headers,

body=response.content,

request=request

)

return response

def download_request(self, request: scrapy.Request,

spider: Spider) -> Deferred:

del spider

start_time = time()

d = as_deferred(self._download_request(request))

d.addCallback(self._cb_latency, request, start_time)

return d

@staticmethod

def _cb_latency(response: Response, request: scrapy.Request,

start_time: float) -> Response:

request.meta["download_latency"] = time() - start_time

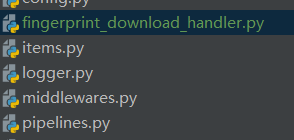

return response然后将文件丢到,下面图的位置
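The handler turns a coroutine into a Deferred with `asyncio.ensure_future` and `Deferred.fromFuture`, so Scrapy has to run on Twisted's asyncio reactor; with any other reactor there is no running asyncio loop to drive the curl_cffi request. Projects generated by recent Scrapy versions already contain the line below in settings.py. If yours does not, this is a sketch of the setting to add (worth checking against the documentation of your Scrapy version):

```python
# settings.py
# Run Scrapy on Twisted's asyncio-based reactor so that asyncio coroutines,
# such as the curl_cffi request in the download handler, actually execute
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"
```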

Then, in settings.py, replace scrapy_fingerprint with your project's package name. With this method there is no need to pip install anything.

```python
DOWNLOAD_HANDLERS = {
    'http': ('your_project_name.fingerprint_download_handler.'
             'FingerprintDownloadHandler'),
    'https': ('your_project_name.fingerprint_download_handler.'
              'FingerprintDownloadHandler'),
}
```

Result

The status code is now 200, which means the request went through.
